Here is a copy of the presentation that I gave at API World 2017. I've had the fortune (or misfortune, as the case may be) to work on API platform governance programs for the past several years. This is a culmination of my lessons learned during this time.

I am curious - how do people learn about API practices? Where do you go for new tips and techniques? I happen to be engaged on many fronts. As we go along, you'll see my contact info at the bottom of almost every slide. Please, if anything in this preso raises questions, or you have different insights, let's talk about it. Let's have the kind of dialog that should be happening within your companies. Why do we need to help each other figure things out?

Because change is constant. And, in other news, the sky is blue and water is wet, right? We all accept this as being obvious. And yet, when it comes to managing software complexity, API or otherwise, within our organizations, we create rules, processes, and punishments for those things that deviate from our understanding of the world today. We readily acknowledge change and yet build governance as if things won't change. We can change the narrative. Governance doesn't have to be restrictive. Instead, I'm here to paint a different picture, one where governance is an enabler of positive change.

And pick your physical metaphor, be it a radar, or a cycle, but these new approaches are going to continue to come. That's why we're here and why APIs have been so incredibly successful. APIs are a strategy for dealing with change, whether they are web-based, RESTful, or otherwise.

Modularity of systems is a successful approach to dealing with change. Writing to the interface, rather than the implementation, means that when a better persistent data store comes along, or when a better framework presents itself, we can take advantage of that with a minimum amount of disruption to the rest of the company. That said, this isn't about technology. Or at least that is not why it is valuable.

Swapping out a data store under the covers is just intellectual noodling unless it delivers a tangible business value. At Capital One, the modularity that our API program delivers has allowed us to create value on platforms that didn't even exist three years ago. Here we have three very different customer experiences: a mobile native application, a voice assistant, and a chatbot. When we started our API journey several years ago, did we know that we needed APIs to be a launch day partner for the Amazon Echo Show, or that we needed to translate an emoji to a financial transaction? No, of course not. However, being able to execute on an API Strategy has meant being able to deliver the kind of customer experiences that we won a JD Power award for a few months ago.

What does culture change look like? Well, if you're Jeff Bezos, it looks like this. The story goes that sometime around 2002, 2003, he sent out an internal memo to the Amazon staff dictating that all functionality should be exposed via standard interfaces. Anyone that didn't do that would be fired. That culture change to support a strategic API plan is what led to the eventual success of not only Amazon, but of Amazon Web Services. Now, you all seem like very lovely people. Even though we've just met, I can tell. But, I'm willing to bet you don't have the kind of executive authority to make these kinds of decrees in your own organizations. And that's how we end up with governance, or, all too often, bad governance.

Culture is those things that are important to the organization. It defines the personality of the company and how that company responds in times of stress. It is the shared belief in how things are done, and what things are important. It is how a company responds to change. Unfortunately, bad governance attempts to eliminate, or at least restrict, the amount of change that can occur at any given time. This is why companies have "approved tech stacks" that everybody must build to. Developers can only use certain frameworks. Or write in specific languages. Or use only one brand of laptop. Governance, in these situations, is not about the enablement of strategic delivery. Or about positive cultural change. Governance, here, becomes about risk management in the face of change. It says "somebody screwed up, let's make sure they don't screw up again". Governance becomes a list of allowances that made sense in the moment in which they were drafted, and not easily changed for future considerations. When all you have is a hammer, every problem to be solved becomes a nail. If you have standards that allow for web APIs, then all problems must be solved by APIs. If your infrastructure requires protobufs, then a solution that gRPC is optimized for is off the table. Governance, rather than enabling, restricts the solution space. The culture either complies with this governance (thus lacking the capacity to innovate) or, more commonly, circumvents it. That's shadow IT and, by its very unaccounted-for nature, re-introduces the kind of risk this governance was meant to address in the first place.

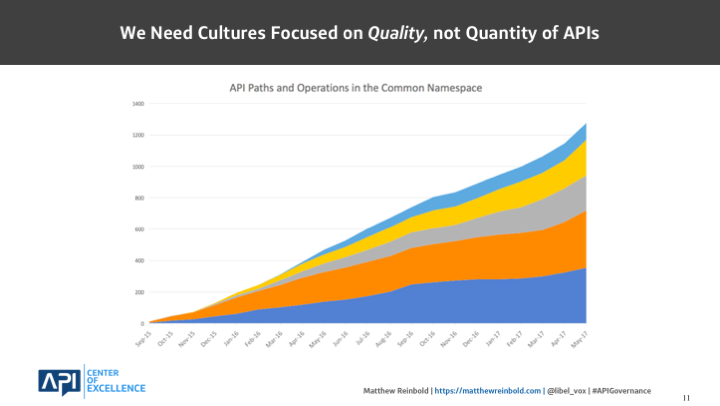

At Capital One, we had an opportunity. This is a small snapshot of our API footprint but it hints at the challenges we faced in 2016. We had created a culture that incentivized the creation of APIs. But, as the CoE watched, we became increasingly concerned about whether teams were creating the right APIs. Were these good APIs? Easy to use? Readily available to be combined into new products and services to deliver business value? Or had we created a culture which prized poorly written, unintuitive point-to-point integrations, because that added to the overall count? Had our culture optimized for the quantity of APIs, rather than the quality of APIs? More importantly, if that proved to be the case, how would we change the culture?

As the CoE began to wrestle with the problem, a common question that I heard several times was "Why aren't the teams following the rules?" Among many in our leadership, the assumption was that if we just crafted the "write good APIs" rule, then our problems would be solved. That wasn't going to happen. What we needed, instead, was a systematic approach that incentivized desired outcomes and dynamically, holistically reacted to changes in the landscape.

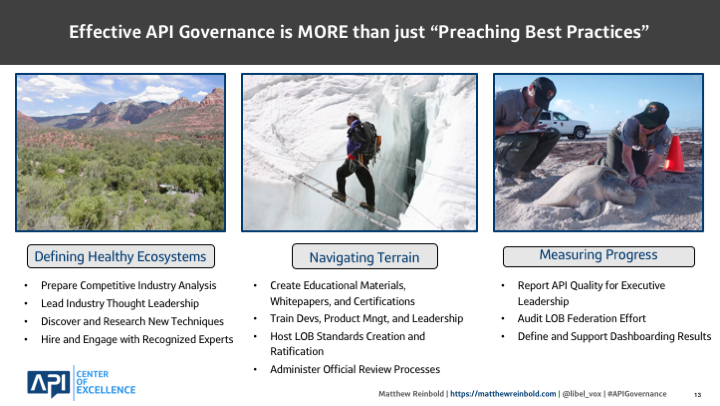

The first part was defining a healthy ecosystem. Applying policy on an API-by-API basis meant we risked not seeing the forest for the trees. Stepping back, we recognized that we were entrusted with a complex ecosystem. And like a complex ecosystem, there were some actions that would help it flourish. There were also things that, like invasive species, could jeopardize its health. Once we felt we adequately understood the terrain, the second part was determining how to guide teams from where we were to where we needed to be. There are many ways to cross a chasm. To do so successfully means acknowledging the existing culture. At Capital One, we have a very heavy preference for PowerPoint decks, for better or for worse. Engaging with people means meeting people where they are, on their terms, to start a dialog. The third piece is ensuring that we're measuring our performance. I'm excited to meet my governance peers from other industries. I'll ask them how they quantify success and they'll all too often refer to their published API Style Guide. And I'll ask them how they know that it is working. Unfortunately, many don't have a good answer. Without some measurement of performance, a governance effort won't be able to determine progress toward a target state, the effectiveness of initiatives, or illustrate the journey for executive leadership. That is not an effective way to run a program or ensure business value is being created.

Let's dig deeper into each of these parts. Having a healthy, reactive ecosystem does not mean that there are no rules. Reverting to the wild west, where freewheeling console cowboys fire off whenever (and whatever) they want is no more a guarantee of delivering on business value than bureaucratic, dictatorial, and centrally planned systems. Certain things still needed to be defined and enforced. But it isn't all the things. This is the principle of "selective standardization".

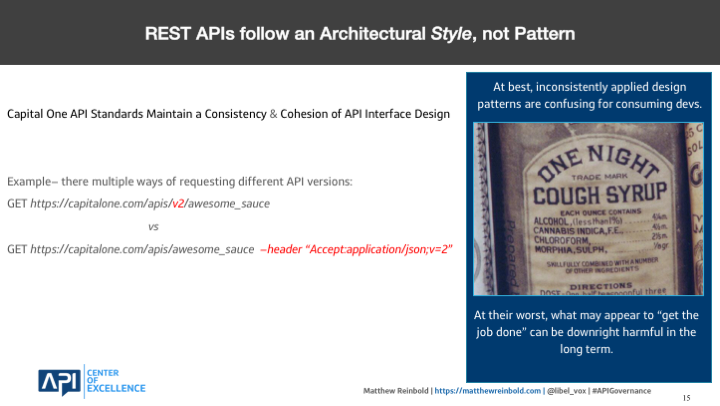

Picking sides is necessary because REST APIs follow an architectural style, not a pattern. It's not a specification and that's a big reason you'll find all manner of religious flame wars over this approach versus that. However, for the sake of getting work done, there's a need to identify those things that are important for efficient operations and ensure that they're adopted uniformly across an organization. Take API versioning. I have two approaches presented here, but there are more. In this case, we want to ensure that a developer with API experience in Plano can leverage their experience and utilize their learned patterns when they attempt to use an API created by teams in northern Virginia. In this case, it is less about being "right", but selectively standardizing those things that add value in being consistently implemented across the organization. And then, of course, you also want to ensure that standards are protecting the long term health from detrimental practices. Like the pictured cough syrup, there are a lot of approaches that, in the short term, appear to get the job done. Alcohol, cannabis, chloroform, and morphine probably solve the problem in the short term (I'm guessing). But do that on a regular basis and a body will have some serious, long-term ramifications.

I illustrate the fallacy of best practices by talking about a different interface that we're all familiar with, the electrical outlet. Which one of these is the "best" way of conducting electrical current? Hopefully, you'll agree with me that it is contextual; where you are has a lot to do with the interface you're likely to see. But imagine the pain it would be if every outlet in a house were slightly different. How difficult would it be just to plug something in and get on with work if every interface were seemingly chosen at random? How cumbersome would having to manage all those adapters be? I try to refrain from using the phrase "best practice" when it comes to the undefined, or subtle, nuance around API design. To say something is "best practice" strongly implies that everything else is "worst practice". Reality is that it may just be different, but - for the sake of consistency - we haven't adopted it in our house. Instead, I refer to "common practice". The documentation of this "common practice", or selective standardization, isn't a bad thing. However, this is where I see many organizations stop. They write an API style guide, publish it, and then wonder why the haphazard adoption by well-meaning volunteers seems to have created more problems than it solved.

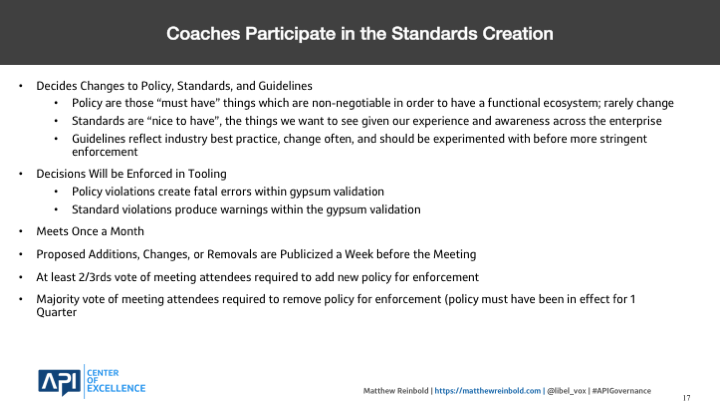

At minimum, the standards must be a journey, not a destination. A key component to "selective standardization" is knowing what to select. It is one thing for us in our ivory tower to throw darts at market forces and team needs. It is entirely another to repeatedly engage with those doing the work. Our coaching effort identifies those passionate practitioners throughout our lines of business who have raised their hands and said, "getting this right is important to my teams and me". Coaches not only receive additional training that they then apply to their teams. They also earn access to evolving our standards. In this way, standards aren't something that are dictated to teams. Teams drive the standards. These aren't alien requirements from another planet. They see their own needs and concerns reflected back at them. That is an incredibly powerful motivator toward acceptance and buy-in.

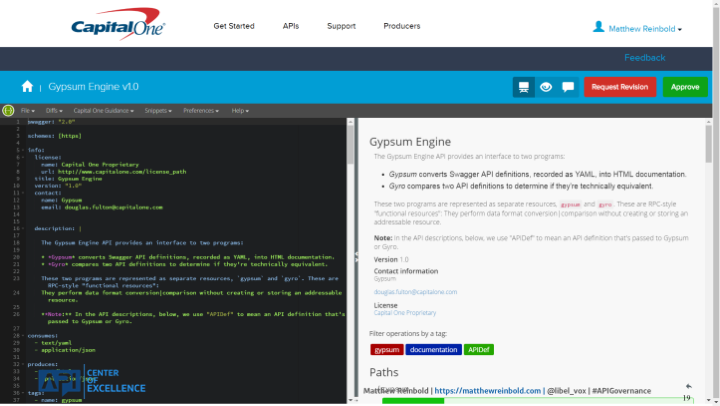

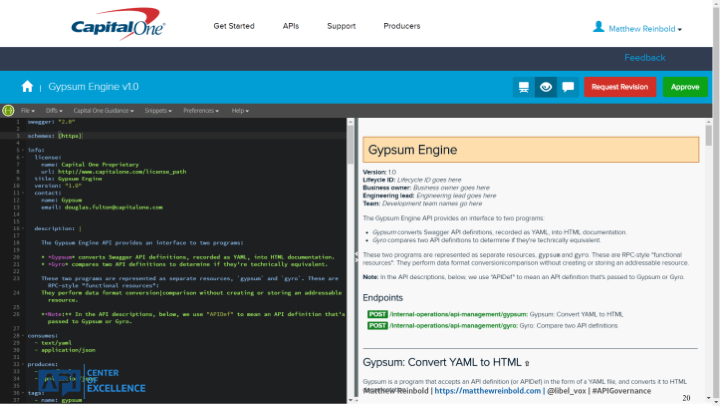

After identifying some aspects of a healthy ecosystem we now need to help teams traverse it. Each team is at a different point in their journey, and different points of an API lifecycle require different interactions. At Capital One, we have a four-phase lifecycle for API development: planning, design, development, and deployment. An OpenAPI description is the artifact that progresses through the process. Not only is it used to capture the team's intent, but approved, machine-readable descriptions are what enable automatic publishing to a service discovery portal and provisioning to our API gateway.

When they click on the 'eyeball', in the upper right, teams get a preview of what their documentation will look like in the service discovery portal. We had different needs in how we wanted our content organized so we provided a different rendering from the default Swagger UI. It is during this preview that any violations of those "selective standards" are called out. These are presented to teams so that they can fix the errors prior to engaging my team in design discussions. This is incredibly powerful. Rather than spending time pointing out that teams can't submit a body as part of an HTTP GET request, for example, reviewers are freed up to address higher level concerns (more on that in a moment).
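To make the idea of an automated check concrete, here is a minimal sketch of one such rule: flagging GET operations that declare a request body in an OpenAPI description. It is not the lifecycle tool's actual implementation; the function name `find_get_bodies` and the sample description are hypothetical, and the description is assumed to have already been parsed into a Python dictionary.

```python
from typing import Any


def find_get_bodies(description: dict[str, Any]) -> list[str]:
    """Return one message per GET operation that declares a request body."""
    violations = []
    for path, item in description.get("paths", {}).items():
        get_op = item.get("get")
        if not isinstance(get_op, dict):
            continue  # this path has no GET operation
        # OpenAPI 3.x declares bodies with `requestBody`;
        # OpenAPI 2.0 (Swagger) uses a parameter with `in: body`.
        has_v3_body = "requestBody" in get_op
        has_v2_body = any(
            p.get("in") == "body" for p in get_op.get("parameters", [])
        )
        if has_v3_body or has_v2_body:
            violations.append(f"GET {path}: request body is not allowed")
    return violations


# Hypothetical description with one offending operation.
sample = {
    "openapi": "3.0.3",
    "paths": {
        "/accounts": {"get": {"requestBody": {"content": {}}}},
        "/offers": {"get": {"parameters": [{"name": "limit", "in": "query"}]}},
    },
}
print(find_get_bodies(sample))
```

A real rule set would hold many such checks, each small enough that a team can read the message and fix the description without a reviewer in the loop.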

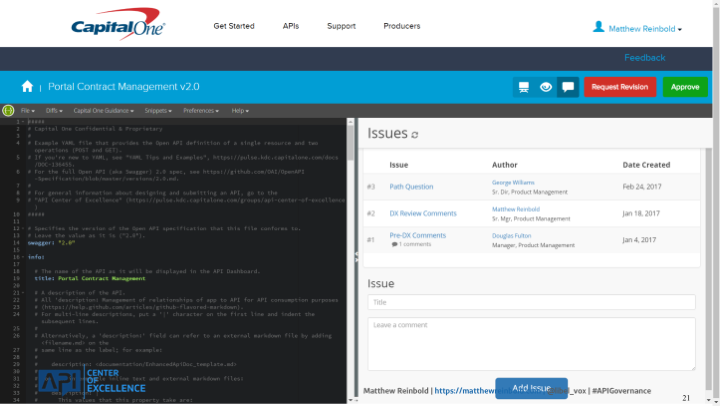

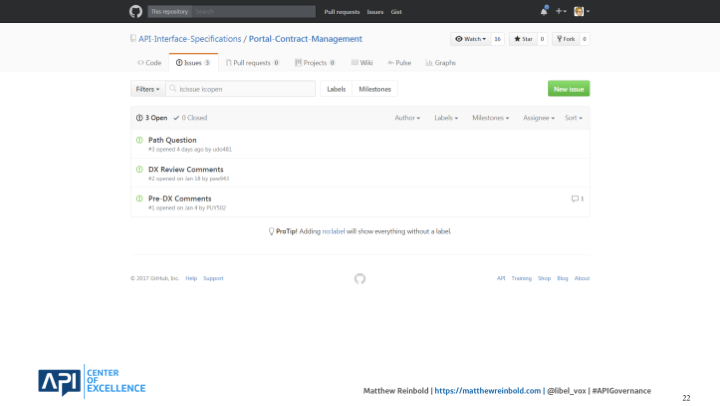

Those discussions are captured and maintained alongside the API description. The history of how the API design evolved to be the way that it is can be seen by clicking on the word balloon. Previously, conversation would get lost in email threads or chat channels. Not only was that incredibly hard for people to track down if they had questions later, but that history became a black box for new team members. Without the history, they had no insight into what were highly contested aspects that made the API what it was, and which pieces were fair game to be changed.

The lifecycle tool uses an enterprise instance of GitHub as the system of record. So, in addition to saving the conversational discussion, we can also easily do diffs between versions of an approved API design. Having an API design artifact that moves through a lifecycle, with transparent and maintained history, enables collaboration. But what is the direction we're moving teams toward?

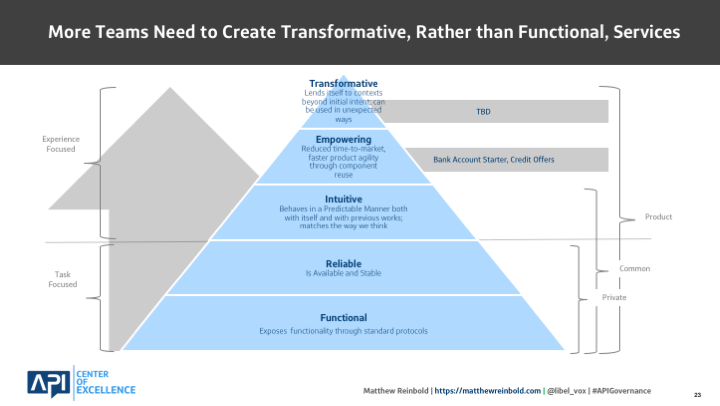

When I need to illustrate web API design maturity, I've started to use a pyramid. At the bottom are those APIs that provide some functionality over HTTP and little else. The next level are those APIs which don't just expose functionality, but are also reliable. An API that is down Thursday nights from 3-5am because of backend batch processing may be functional, but not as reliable as we'd like. Likewise, if an API can't (or won't) scale to meet demand, it also isn't reliable. Intuitive APIs are where we begin to see thought put into the developer experience (or DX). At this level we're thinking outside-in, rather than from the data record out. Key traits are behaving in a predictable manner, and exhibiting the selective standardization that we previously defined. Moving a tier above that are empowering APIs. Beyond just having a good developer experience, these APIs also contribute direct, or nearly direct, business value. In the case of our Bank Account Starter and Credit Offer external APIs, we can trace significant new revenue and accounts booked through affiliate usage. These APIs have grown the funnel for, or empowered, our existing lines of business in meaningful ways. At the top of the pyramid are transformative APIs. These are rare, but powerful. These are APIs that don't just expand existing lines of business, but open up brand new opportunities. We're currently working on some ideas but, as I said, these are some of the hardest APIs to create successfully. Should all APIs that we produce be transformative? No. That's why the pyramid is shaped the way it is, with more reliable APIs than intuitive, more intuitive than empowering, etc. What is important is that, during collaboration, we look for opportunities to advance the work; if a team only has a functional API, can we make it reliable? If it is functional and reliable, can we also make it intuitive?

The last point I'll make about helping teams navigate this landscape is that simply posting an API style guide, or sending out a firmly worded email, is a poor substitute for rolling up one's sleeves and engaging directly with developers. Whether it is San Francisco, in the top picture, northern Virginia on the left, or in Plano, TX on the right, building trust is still something done best face to face; trust that the API CoE has the developer's best interests at heart, and trust in our developers to do the right thing. Believing that API stands for "assume positive intent" is much easier when you've seen eye to eye with someone. After we've left, it is the responsibility of the coaches to continue being the approachable, friendly face devs work with on their questions and concerns. They are what turn a one-off training into an ongoing, living discussion in their respective areas.

Enough of this Kumbaya crap. It may sound like a great story. But how do we know it's working? Whether it is because it is hard or because it is an afterthought, meaningful metrics at the API program level are hard to find. And that is unfortunate. Business leaders have invested years, and sometimes tens of millions of dollars, towards service development. When asked if these technology initiatives have been successful, these same leaders have little to point to other than statements like: "I've deployed 47 APIs this year, and my peer on the west coast has 30 in production, so my API folks must be better". Hearing it out loud, hopefully that sounds as ridiculous as it is. But, in the absence of any other KPI, they go with what they have.

As this quote by Tom Peters implies, metrics don't exist in a sterile clean room. They actually drive behavior. Metrics can drive culture change in an organization but they must be carefully monitored for unintended first (and second) order effects. I want to emphasize that the metrics I'm about to cover are meant to address specific concerns that existed as we surveyed our landscape. Your company will be different. Attempting to implement these without consideration of your specific situation is likely to lead to disappointing results.

As we stepped back and attempted to answer what makes a good API, we saw three categories of numbers emerge: conformance, performance, and transformative metrics. Conformance is the adherence of an API design to our standards. These are the rules and usage patterns that can be checked for, automatically, in our lifecycle tooling. Performance is how responsive an API is when called. These are easily quantifiable metrics and often the first numbers that people think of when reaching for numbers. Together, however, conformance and performance are not enough. Just meeting performance expectations without deviation from the rules doesn't make a great API. It is like typing away at a word processor with an expected words-per-minute without creating a spelling or grammatical mistake; while you might not have any squiggly lines anywhere on the page, it doesn't mean that you've written a compelling story. That is where the transformative metric comes in. This component, which I'll talk about in a moment, allows us to capture the subjective nature of the APIs in a way that can be tracked and acted upon.

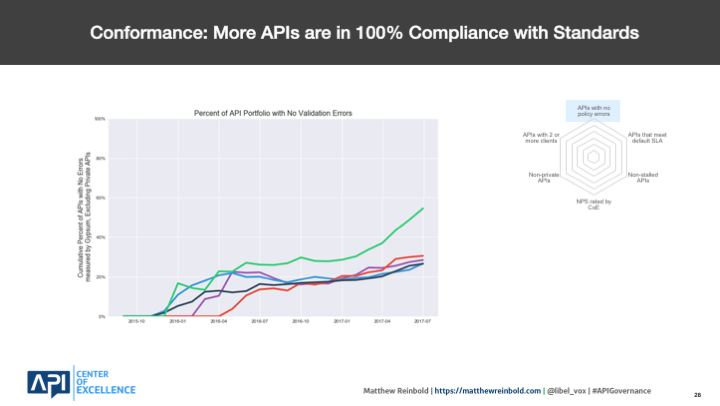

In this first slide, the number of APIs submitted to review without any deviations from our standards is broken out by LOB. For much of 2016, many of our producing devs were submitting things to collaboration that were free from policy deviations only 15-20% of the time. My team, subsequently, had to spend precious consultation time pointing out discrepancies rather than on moving to transformative experiences. Given our desire to move teams up the quality pyramid, this was a problem. In January of this year, we introduced self-service validation into the tool that could be done prior to submitting for review. With this capability, development teams are able to correct their mistakes on their own time, and the CoE can devote more of the review to higher order tasks.
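As a rough illustration of how a conformance number like this could be derived, the sketch below computes the percentage of submissions with zero deviations for each line of business. The record shape, the function name `clean_submission_rate`, and the LOB labels are all assumptions made for the example, not details of our tooling.

```python
from collections import defaultdict


def clean_submission_rate(submissions):
    """Map each LOB to the percentage of its submissions with no deviations.

    `submissions` is an iterable of (lob, deviation_count) pairs.
    """
    totals = defaultdict(int)
    clean = defaultdict(int)
    for lob, deviations in submissions:
        totals[lob] += 1
        if deviations == 0:
            clean[lob] += 1
    return {lob: 100 * clean[lob] / totals[lob] for lob in totals}


# Hypothetical review submissions: (line of business, deviations found).
submissions = [
    ("Card", 0), ("Card", 2), ("Card", 1), ("Card", 3), ("Card", 5),
    ("Bank", 0), ("Bank", 0),
]
print(clean_submission_rate(submissions))
```

Tracking the same percentage month over month is what lets a change such as self-service validation show up as a trend rather than an anecdote.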

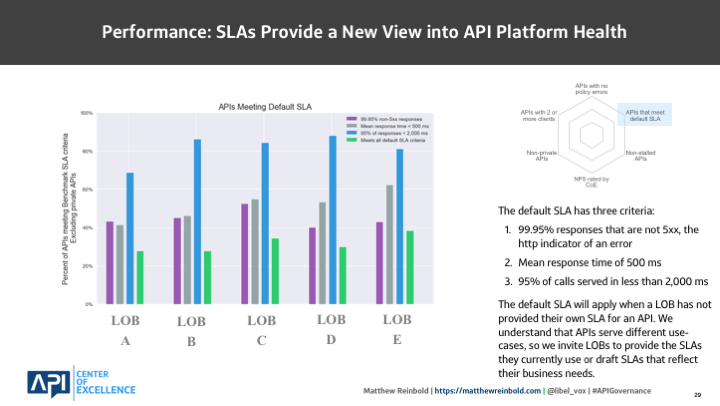

A vast majority of the APIs that Capital One produces are for internal use only. And while our external work has clearly defined service level agreements (SLAs), in a weird quirk, many of our internal APIs do not. This concerned my team for a while but when we'd ask for someone to define them, we'd never hear back. So we took the opportunity to define our own in order to create a culture change. We defined a three-pronged SLA: 99.95% of the responses should not have a status code in the five-hundreds, the mean response time needed to be under 500 milliseconds, and 95% of the calls served needed to be less than two seconds. We spent time deriving these values but, ultimately, they were a guess on our part. But their very existence means teams now have to begin considering whether their APIs meet this behavior. And, for teams that have considered their performance and published their own SLAs, we happily apply those numbers to the performance that we can see.
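The three-pronged SLA translates directly into three checks over a batch of call records. The following is a minimal sketch under stated assumptions: each call is represented as a `(status_code, response_seconds)` pair, and the function name `check_default_sla` is hypothetical. How calls are actually collected and windowed is outside its scope.

```python
from statistics import mean


def check_default_sla(calls):
    """Evaluate the three default SLA prongs over a non-empty batch of calls.

    `calls` is a list of (status_code, response_seconds) pairs.
    """
    if not calls:
        raise ValueError("at least one call is required")
    total = len(calls)
    non_5xx = sum(1 for status, _ in calls if not 500 <= status <= 599)
    under_two_seconds = sum(1 for _, seconds in calls if seconds < 2.0)
    mean_seconds = mean(seconds for _, seconds in calls)
    return {
        # 99.95% of responses must not be in the five-hundreds.
        "availability_ok": non_5xx / total >= 0.9995,
        # Mean response time must be under 500 milliseconds.
        "mean_latency_ok": mean_seconds < 0.5,
        # 95% of calls must complete in less than two seconds.
        "tail_latency_ok": under_two_seconds / total >= 0.95,
    }


# Hypothetical batch: nine fast successes and one slow server error.
batch = [(200, 0.1)] * 9 + [(503, 3.0)]
print(check_default_sla(batch))
```

For a team that publishes its own SLA, the three thresholds would become parameters rather than constants.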

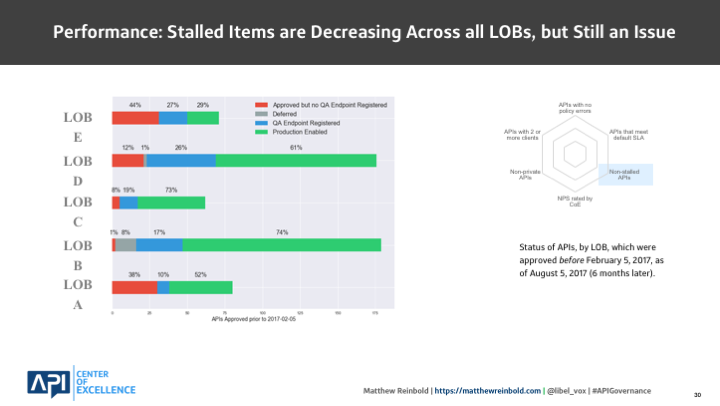

Another example where we're using metrics to drive cultural change is with what we call "stalled" APIs. These are API descriptions that development teams begin but, six months after the design has been approved, still haven't made it to production. While we had a story here or there guessing what might be happening, this metric allowed us to capture the effects of this behavior at a LOB level, as well as identify whether it is getting better or worse. Is this the result of shifting product strategy? Frequent team disbandment and reorganization? Before we could start addressing those problems, we first had to identify that we had one.
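The stalled definition can be expressed as a simple date comparison. This sketch assumes each record carries an approval date and an optional deployment date, and it approximates "six months" as 182 days; the names `is_stalled` and `stalled_by_lob`, the record shape, and the LOB labels are hypothetical.

```python
from collections import Counter
from datetime import date, timedelta

# "Six months" approximated as 182 days; this cutoff is an assumption.
STALL_WINDOW = timedelta(days=182)


def is_stalled(approved_on, deployed_on, today):
    """True when a design was approved over six months ago and never deployed."""
    if deployed_on is not None:
        return False
    return today - approved_on > STALL_WINDOW


def stalled_by_lob(records, today):
    """Count stalled APIs per LOB from (lob, approved_on, deployed_on) records."""
    return Counter(
        lob
        for lob, approved_on, deployed_on in records
        if is_stalled(approved_on, deployed_on, today)
    )


# Hypothetical records.
records = [
    ("Card", date(2017, 1, 1), None),               # approved long ago, not live
    ("Card", date(2017, 6, 1), None),               # approved recently
    ("Bank", date(2016, 11, 1), date(2017, 2, 1)),  # made it to production
    ("Bank", date(2016, 12, 1), None),              # approved long ago, not live
]
print(stalled_by_lob(records, today=date(2017, 9, 1)))
```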

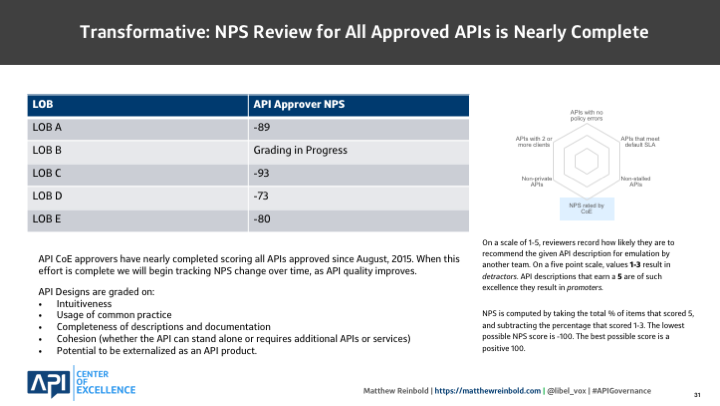

NPS stands for net promoter score. It encapsulates a variety of the subjective nuances comprising an experience. It is very handy in capturing the "goodness" of an API design. There were a couple of ways we could have approached this scoring. However, executive leadership was already well versed in what NPS is. We've used it forever to gauge the customer experience on a variety of our services and products. It is not enough to just ask a person scoring an API "is this good?". One of the subtly clever aspects of NPS is that it reframes the question to put a reviewer's professional reputation at stake. Rather than asking "Do you like this API?", NPS asks "Would you recommend this API to your peers?". Everyone likes to think they are arbiters of style and distinction. As such, they take great pains to not recommend sub-par experiences. The lowest possible score for NPS is -100 and our sample scores show we can do better. However, those scores aren't surprising given that we've been producing little more than functional and reliable APIs for years. The lack of higher-order, transformative APIs shows up in these scores. But now that we're measuring, we can track whether ongoing initiatives are making it better or whether we need to do something else.
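For anyone who hasn't computed one before, the standard NPS arithmetic is sketched below: respondents answer the "would you recommend" question on a 0-10 scale, those answering 9 or 10 count as promoters, those answering 0 through 6 count as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function name and sample ratings are illustrative only, not our actual survey data.

```python
def net_promoter_score(ratings):
    """Return NPS (from -100 to 100) for a non-empty list of 0-10 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)   # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # 0 through 6
    # Ratings of 7 or 8 are passives: they count in the total only.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical reviewer ratings for a single API.
print(net_promoter_score([10, 9, 8, 7, 6, 3]))
```

Because passives dilute both sides, an API that everyone rates a 7 or 8 scores exactly zero, which is why merely functional and reliable designs tend to land low.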

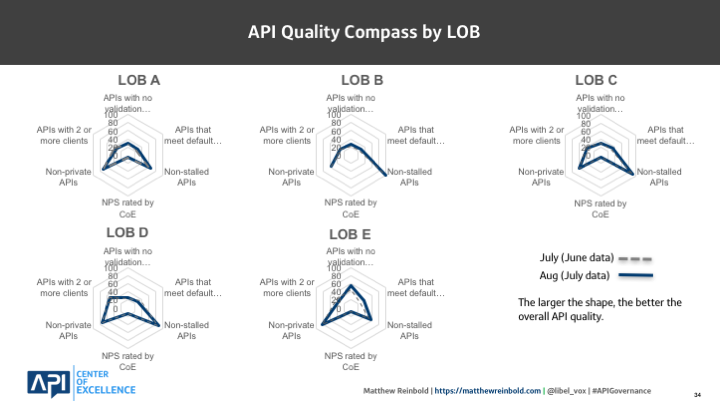

There are some additional metrics that I won't mention here. The point is that these are an ongoing conversation. When a metric outlives its usefulness, or we find it is incentivizing the wrong behavior, we change. We take all of that data and boil it into the single visualization you see here. These radar plots allow leadership to, at a glance, see their organization's API quality relative to their peers. As quality along an axis increases, the shape grows outward, like a balloon.

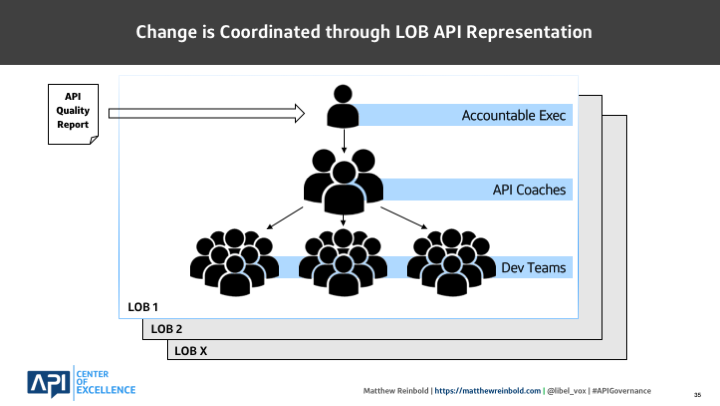

The CoE packages these insights together once a month and sends them to the accountable executive over each line of business. Usually this report includes not only observations, but tactical items that can be done to positively impact a metric or metrics. The executive directs coaches within their area to implement those changes, if they deem them necessary. Finally, the coaches then work with their development teams.

That is a brief overview of how we've attempted to create a governance program that is change enablement while incentivizing culture change. We define healthy ecosystems, help teams navigate that terrain, and then measure our effectiveness along the way. A common comment that I hear, at this point, is that "This sounds great, but we don't have the budget for this". Or "we need a bigger team". I'd agree; if we assume that making a massive change can only happen with a massive amount of resources, then we're likely to end up on the unpleasant end of a political tug-of-war.

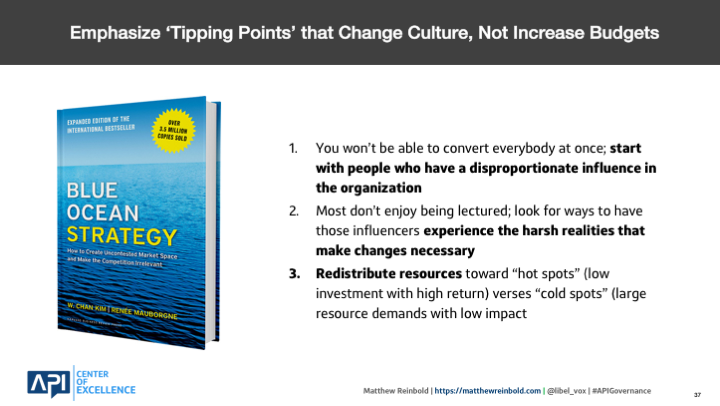

In regards to doing more with less, and seeding the cultures to make that approach viable, I highly recommend the book Blue Ocean Strategy. The majority of the book is about identifying new business markets and, while interesting, that's probably not what most people in this room do. However, the last third talks about creating successful culture change in order to compete in those markets. It is amazingly relevant to discussions on software governance. One of the techniques it discusses is the relationship of kingpins, fishbowls, and horse-trading. Going back to our API quality report work, we don't keep those numbers to ourselves. From the beginning, the distribution was about getting the report to the people, the kingpins, with the authority (and the responsibility) to act on the findings. Next, the report acts as a fishbowl where each of the kingpins can see how the others are doing. In my experience, executives didn't get to where they are without being a tad competitive. Publishing results and easing comparative analysis creates a natural driver for action; each of the leaders wants to be number one among their peers. The final element is horse-trading. Over time, sometimes by sheer inertia, certain areas accrue an abundance of resources despite requiring different resources. And my CoE doesn't have all the time or energy to produce everything that we feel would be impactful. In these cases, we can approach a LOB that is doing great work in a specific area and offer to share their story as an example for the wider org. In return, we ask for help producing those insights we may not have been able to do otherwise. We have an audience. They have manpower. A careful audit of what one has and the judicious application of horse-trading can result in the kind of effects one might only associate with a "bigger budget".

(Update: 2020-03-25) Since publishing, I have read a number of additional books unpacking digital transformation and shifting corporate behavior. Two of the best are Agendashift, by Mike Burrows, and Switch, by Chip and Dan Heath.

In conclusion, change is constant. If the role of governance is to arrive at the perfectly optimized system for today, we will find ourselves perpetually frustrated tomorrow. Day after day, year after year, that theoretical perfection will seem more and more distant. Instead, if we look to governance not as a destination, but a journey we are on, then change is just part of the landscape.

I'll end on one final Tom Peters quote: "Excellent firms don't believe in excellence - only in constant improvement and constant change." Build a culture whose governance processes are optimized for change.

Thank you for your attention. My name is Matthew Reinbold. I am the Lead for the Capital One API Center of Excellence and, if you'd like to continue this discussion, my contact information is listed here.

That's it for the presentation. But, if you're interested in more, check out the most commonly asked questions (and answers).