In previous posts, I’ve discussed how one’s environment impacts behavior, how defaults and templates are part of a software development environment, and possible behavioral prompts to consider in an OpenAPI template. But what do irresponsible or counter-productive environmental prompts look like? The recent kerfuffle over GitHub’s Copilot AI is a great example.

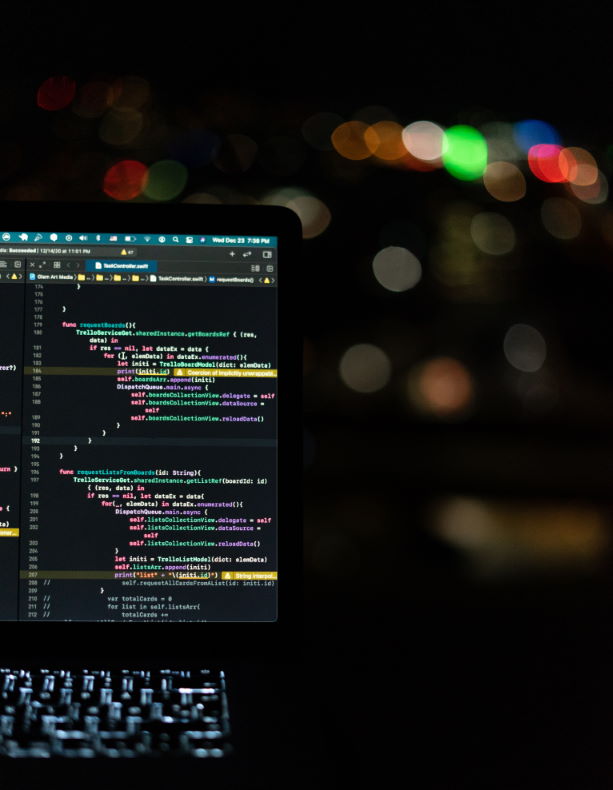

I discussed Copilot in a recent edition of my API email newsletter, Net API Notes #168. In short, Copilot is an assistant for writing code. As a developer types in their Integrated Development Environment (IDE), Copilot suggests “whole lines or entire functions” learned from similar code found across GitHub.

On the surface, this seems like an example of using the environment to prompt behavior. When it recognizes what the developer is attempting, Copilot prompts a solution similar to what others have done previously. Being the path of least resistance, the developer (particularly a junior one) is likely to accept the default provided and move on to their next task.

In that scenario, however, what outcome is the environment optimizing for? Quality or convenience? If we consider how Copilot was trained, we begin to get an answer.

From a published paper and subsequent tweets, it appears Copilot was trained on all publicly available GitHub code. As someone who has contributed their share of broken, test, and experimental code to GitHub, I can guarantee that not everything publicly available follows best practices. Further, as I discussed in my overview on machine learning’s API future, Copilot’s algorithm can’t discern which common programmatic approaches are good, only which ones have occurred historically. It then propagates those approaches through its suggestions.
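To make that point concrete, here is a deliberately crude sketch. Copilot itself relies on a far more sophisticated learned model, so this is not how it works internally; it is a hypothetical frequency counter, assuming a made-up corpus of prompt/completion pairs, that shows why a system rewarded only for matching historical code has no notion of "good." Whatever pattern appears most often wins.

```python
from collections import Counter

# Hypothetical training corpus: (what the developer is doing, what they wrote next).
# The insecure pattern is simply more common, as it often is in real public code.
HYPOTHETICAL_CORPUS = [
    ("query users by name", "interpolate name into SQL string"),
    ("query users by name", "interpolate name into SQL string"),
    ("query users by name", "interpolate name into SQL string"),
    ("query users by name", "use parameterized query"),
]


def train(corpus):
    """Count how often each completion follows each prompt."""
    counts = {}
    for prompt, completion in corpus:
        counts.setdefault(prompt, Counter())[completion] += 1
    return counts


def suggest(counts, prompt):
    """Return the most frequent completion; quality never enters the decision."""
    seen = counts.get(prompt)
    if not seen:
        return None
    return seen.most_common(1)[0][0]


if __name__ == "__main__":
    model = train(HYPOTHETICAL_CORPUS)
    print(suggest(model, "query users by name"))  # interpolate name into SQL string
```

Nothing in `suggest` asks whether a completion is secure or correct; it only asks how often it was seen.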

What does that mean in practice? As an industry (and with APIs specifically), software developers are still wrestling with comprehensive, security-minded practices. It should come as no surprise, then, that Copilot ends up prompting the same kinds of security flaws that have bedeviled the industry for years. A security researcher studying the issue says Copilot suggests:

“...bad code that looks reasonable at first glance, something that might slip by a programmer in a hurry, or seem correct to a less experienced coder.”

In a more API-specific example, from the same article:

“The AI tool also ‘blundered right into the most classic security flaw of the early 2000s: a PHP script taking a raw GET variable and interpolating it into a string to be used as an SQL query, causing SQL injection’”.
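For readers who haven’t run into it, the flaw described above looks roughly like the following. The quoted example was PHP; this is a minimal Python sketch using the standard library’s `sqlite3` module, with a hypothetical `users` table, contrasting raw string interpolation with a parameterized query. It is an illustration of the class of bug, not the code Copilot actually produced.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # The classic mistake: untrusted input interpolated straight into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # every row comes back
    print(find_user_safe(conn, attack))    # no rows: the input is just a strange name
```

Both functions look reasonable at first glance and return the same results for ordinary input, which is exactly why the unsafe version slips past a programmer in a hurry.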

It is easy to optimize a system for speed. However, using machine learning to create environmental prompts isn’t automatically a value-adding activity. Designed poorly, it becomes the equivalent of throwing more spaghetti at the wall. When considering what behaviors an environment creates, don’t lose sight of the quality outcomes.