TL;DR:

The problem isn’t coding with GenAI. The problem is what coding with GenAI lets us get away with. It lets us delay real choices, real trade-offs, and real systemic changes by creating the illusion of movement and acceleration. In a moment when maintenance, curation, and simplification are the actual leverage points, we’re rewarding copycat output. That isn't because software developers are stupid or short-sighted. It's because our incentives, our markets, and our social platforms have been rewired to reward volume over truth, sizzle over substance.

Upfront Disclosure: I use generative AI tools almost every day.

Many otherwise genuine criticisms of AI are undercut when you discover the author has no experience with the tools and is whaling on strawmen of their own design.

So let me be clear: I use generative AI (GenAI) nearly every day. In fact, this piece was developed with the help of large language models. As a remote worker, I find a tireless, talkative partner to rubber-duck with essential. It’s like a writing circle, albeit one that’s available 24/7, never gets hangry, and has perfect conversational recall. Even having to articulate half-formed intuitions or unspoken feelings in a prompt is beneficial. Beyond that, something like ChatGPT is great at identifying weaknesses in arguments or highlighting hiccups in flow.

However, because I use it regularly, I see its limits. GenAI is beneficial for what it is: a fast feedback loop, a pattern spotter, and a corpus summarizer. What it is not is a substitute for the hard work of making trade-offs, reducing complexity, or building healthy systems.

And yet, in boardrooms and strategy decks across the tech industry, GenAI is rapidly being positioned as precisely that: a cure-all for reduced budgets, ballooning backlogs, and stressed teams struggling to maintain momentum. There’s a tremendous amount of hype, and seemingly little critical thinking, which is understandable if you think about it for a moment; if you’re drowning, you don’t critique any potential lifeboat. But that doesn’t mean you’re destined for solid ground.

The AI hype has only grown.

What you’re hearing about GenAI isn’t coming only from your leadership but from an entire ecosystem of misaligned incentives. Fred Hebert, a Honeycomb.io SRE, recently pointed out:

“The usefulness and success of using LLMs are axiomatically taken for granted and the mandate for their adoption can often come from above your CEO. Your execs can be as baffled as anyone else having to figure out where to jam AI into their product. Adoption may be forced to keep board members, investors, and analysts happy, regardless of what customers may be needing.

“It does not matter whether LLMs can or cannot deliver on what they promise: people calling the shots assume they can, so it’s gonna happen no matter what.”

That’s … not great. But add to that the “macroeconomic headwinds” howling since 2023 and suddenly tech leadership’s job is to make the line go up with fewer reporting lines. Releases are slipping, infrastructure is groaning, and the most experienced engineers are leaving for places that didn’t mandate a return-to-office (RTO).

It is hard to ignore the cheerleading coming from the likes of Goldman Sachs’ CIO, who recently named developer productivity the #1 GenAI use case. Similarly, Google says its engineers are 10% more productive. And when LinkedIn is abuzz with claims like “GenAI made development 2x faster and restored my hairline”, your leadership’s first thought isn’t “At what cost?” but “Where do I sign?”

And why not? The pitch is irresistible:

- No reorgs

- No vendor lock-in

- No cultural transformations

Just wave through some corporate card expenses for Copilot and watch the commits fly.

But here’s the trap: developer productivity sounds like a smart and measurable goal. However, most software teams aren’t drowning because they can’t write code fast enough. They’re drowning because they don’t know which code to write, they’re afraid to touch what already exists, or they’re unclear about how what they’re doing creates business value. The systems they’re tasked with modifying resemble a Rube Goldberg machine; one where half the mechanisms are on fire, the other half are forgotten legacy components, and the third half was recently added in an acquisition and needs to be integrated yesterday.

The conversations they’re having sound like this:

In other words, the problem isn’t throughput. It’s clarity. And when you chase productivity without clarity, you just accelerate the mess.

“Ship more” sounds great in a slide deck. Until you realize you’re shipping complexity faster than your team can understand it.

Shipping more, faster is a recipe for disaster.

In his series about simplifying architecture, Uwe Friedrichsen summarizes the situation:

“We are pushing to do the wrong things better and better.”

When GenAI is brought in to “fix” developer productivity without anyone slowing down to ask what’s actually broken, things only get worse. Because GenAI is the ultimate enabler of “just one more”.

More code, more endpoints, more opacity stacked on legacy systems, generated in milliseconds by tools that have no idea what is right or wrong for a given context. All they have are probabilistic matches against code in their training data.

That may work for the seven-thousandth example of a social network client. But the more unique the context, the less capable the help.

GenAI isn’t replacing developers; it’s replacing decision-making.

There’s a whole separate article about how companies use GenAI hype to justify layoffs and then quietly rehire for the same roles at a cheaper, overseas rate. Assuming your company isn’t attempting a stealth outsourcing, GenAI isn’t actually making developers obsolete. It is making choices obsolete.

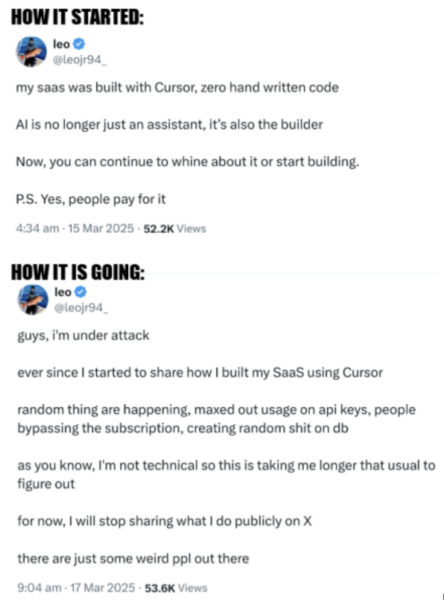

When you drop Copilot or CodeWhisperer into a team that’s already under pressure, you don’t get thoughtful design. You get code at the speed of vibes: half-understood prompts, half-documented results, and autocomplete-driven architecture. Everyone nods along because nobody wants to look like the one standing in the way of “the future”. At the very least, there’s little professional benefit in being that person.

Take Fly.io, which recently published a piece about how it has incorporated GenAI into its development process. In essence, they claim, “AI lets us build faster, even though it makes the code worse, so we’re just trying to slow down enough to make it usable”.

In response, Icelandic web developer and consultant Baldur Bjarnason points out that this isn’t productivity; it’s “lowering the quality bar so we can high-five each other for clearing it”. Said another way, it’s not that the code is good. It’s that it is fast, and nobody has time to care anymore.

This isn’t just a quality problem; it’s a strategic one. Because when GenAI becomes your default way to move forward, you stop choosing what’s worth building. You just … keep building. Faster. More often. Which only compounds problems:

- Developers stop questioning the architecture.

- Product teams assume delivery is “unblocked”.

- Leadership sees velocity graphs going up and assumes it’s working.

Meanwhile, you’ve automated yourself into a corner, a bigger, messier, tech-debtier corner, but a corner nonetheless.

Solving systems complexity requires a different kind of leadership.

Dunking on the AI hype is easy. Several extremely online types have made it their entire personality over the last several months. It’s harder to conceive of something better, especially when your calendar is full, your team is thin, and your CFO wants you to explain why your delivery metrics didn’t improve after you gave everyone robot interns.

I will say you’re not crazy for wanting relief. You’re just not going to find it in autocomplete.

What your org needs isn’t “more”.

It needs maintenance. It needs curation. It needs simplification.

These aren’t sexy initiatives. They don’t demo well. You can’t put “we deleted fifteen endpoints” in a roadmap review and expect applause. But if you want your team to keep moving in five months, let alone five years, this is the work that matters.

You don’t need a new platform or a tiger team. You need a shift in instinct.

Five Behaviors of Leaders Who Choose Resilience Over Vibes

1. Default to Deletion

Before you greenlight another service, ask what can be shut down. Before you automate a workflow, ask whether it’s still necessary. Before you ship more, try shipping less. On purpose.

We don’t need to do more, faster; we need to do less, better.

2. Reward Understandability

If you can’t explain it to a new hire in ten minutes, it’s a liability. Stop rewarding clever hacks. Start celebrating clarity.

Elegance is not optimization. It’s comprehension.

3. Defer Decisions That Add Complexity

Postpone what you can. Push back on “just in case” extensibility. Favor defaults. Shrink option surfaces. Don’t widen interfaces unless there is an explicit use case.

Every new option is a tax on future understanding.

4. Curate What You Have

Prune your internal APIs. Archive stale docs. Audit your SDKs. Stop showcasing how much you have. Start showcasing what actually works.

If you don’t curate your ecosystem, you’re just letting complexity run the museum.

5. Ask: “What If We Did Nothing?”

This one is counterintuitive but powerful. Not everything needs a fix. Sometimes the bravest thing you can do is wait.

Anyone can stomp on the gas. Leadership is knowing when to pump the brakes, and when to get out and walk around.

This is what stewardship looks like. It’s not loud. It’s not flashy. And it won’t get you featured on Hacker News. However, it may just give your team a fighting chance.

Maintenance is the new moonshot.

If this is the sustainable, pragmatic way forward, why are so few people talking about it?

Again, I return to Hebert’s piece, which describes the situation concisely:

“Things won’t change, because people are adaptable and want the system to succeed. We consequently take on the responsibility for making things work, through ongoing effort and by transforming ourselves in the process. Through that work, we make the technology appear closer to what it promises than what it actually delivers, which in turn reinforces the pressure to adopt it.”

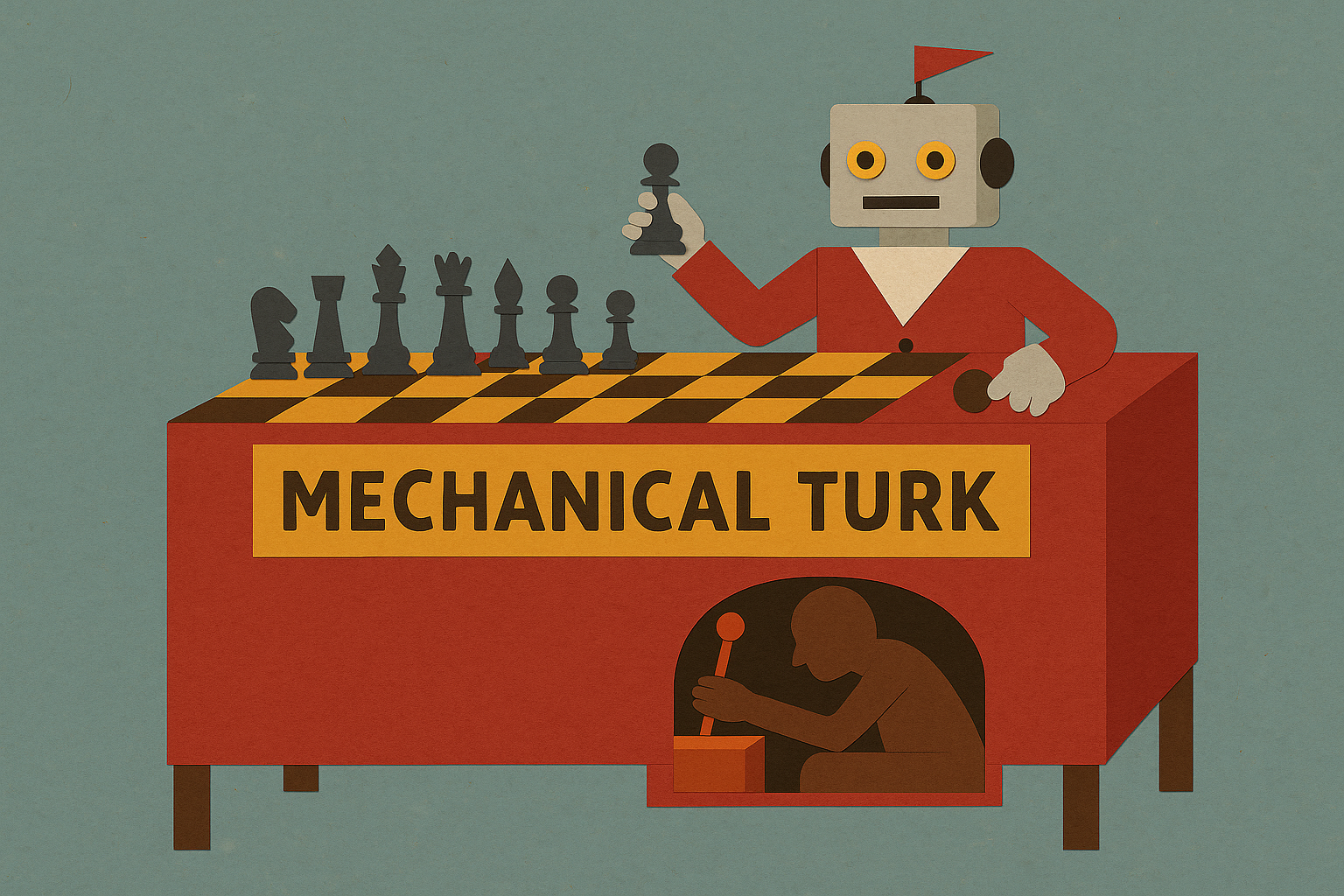

In a sense, the responsibility for making AI’s hyperbolic promises come true is delegated to its human implementers. And if the Mechanical Turk fails to deliver, it is considered the operator’s fault, not the technology’s.

Meanwhile, there are no TED speaker slots for “Doing Fewer Things Slightly Better.” No book deals for “Slow Architecture”. VCs aren’t throwing term sheets at companies promising to do less.

But that doesn’t make it any less critical.

In a world obsessed with acceleration, choosing to maintain (to understand, to simplify, to prune) is now a radical act. It’s leadership that resists panic. It’s a strategy that values longevity over the next quarter’s dashboard.

GenAI isn’t the enemy. But misusing it to paper over hard choices (to avoid trade-offs, to compile more complexity, to pantomime progress) is how we break things beyond repair.

The systems we build reflect the choices we make under pressure. Choose maintenance. Choose clarity. Choose to lead.