My work on pragmatic software governance has evolved. It started with a focus on scaling good API design across a modern enterprise company. Increasingly, I'm interested in how culture impacts technology adoption. Why do some approaches become deeply embedded within an org, while others disappear after a hot minute?

I gave this talk several times this past fall - most notably at APIStrat (Nashville) and API City (Bremerton, outside Seattle). For many, the content may have been unrelatable; what's all this talk about ecosystems when one is only submitting pull requests to a single branch? For a select subset of enterprise folks, however, these issues of scale and co-habitation loom large. There are also few resources available. Hopefully, this post will provide some insight into what they're seeing based on my own experiences.

Hello everyone. My name is Matthew Reinbold and the title for this talk is "A Gardener's Approach to Growing an API Culture". If that topic sounds a bit different, that's because I hope it is. And I'm guessing that it might have attracted an audience in search of something a bit different. What this talk is about is APIs at scale. What considerations occur when you go from managing "a few APIs" to being a gardener of an ecosystem?

I don't know how big your organizations are but, no doubt, you've seen an increase in the level of software complexity. Also, our collective industry has adopted some very nasty habits; everything from move fast and break things to resume-driven development. How do we cultivate software design that, as Fielding described, has a "scale of decades" when the average developer tenure at a company is a year and a half? What I hope to do in this talk is share some of what I have found to be resilient about an API culture, make it relatable through the use of a garden analogy, and share some techniques for those grappling with ongoing technological change within their own organizations.

For the last several years I've been the Director of the Capital One Platform Services Center of Excellence. My team and I are responsible for gardening a complex, distributed-systems ecosystem. Capital One has around 9000 developers that we work with on API and event streaming designs, standards, and lifecycle management. These developers are spread across numerous North American locations and multiple lines of business. They've produced thousands of APIs that we manage with our own infrastructure, resulting in more than two billion request/responses a day. And our messaging infrastructure is on pace to surpass the historical adoption trends we experienced for internal APIs. My team and I are responsible for software process management. We build shared communities of practice and federated responsibility. We've used centralized design management to cross-pollinate the best ideas and multiply infrastructure impact across the organization.

In addition to powerful internal functionality, our investments into distributed architectures have allowed us to: Distributed systems aren't a theoretical gamble for us. It's who we've become as a culture. It's how we win in the competitive marketplace. We are reaping the fruits of our labor. And increasingly, I'm not just interested in the harvest that we have, but how we keep it healthy and prosperous going forward.

I like this image – it takes a healthy garden and breaks it up into its individual components. We can have a greater appreciation for all the interplay that occurs within one of these systems. I'm guessing most people here would be familiar with a garden ecosystem. There's the ground, which includes the mineral composition, the producer plant life, and the consumers - both the animals attracted by the plants - the worms, the bees - and the second order consumers - the snakes, the spiders, etc. I'm glossing over a tremendous amount, and probably doing a disservice in the process, but an ecosystem can be complex. But ecosystems don't just happen. Throwing seeds on the ground and coming back months later expecting something to harvest isn't statistically likely. And micro-managing the interplay between the numerous participants in such a complex environment doesn't scale. [Image taken from https://blog.grovegrown.com/what-grove-does-differently-to-get-people-growing-cd6a71f39864]

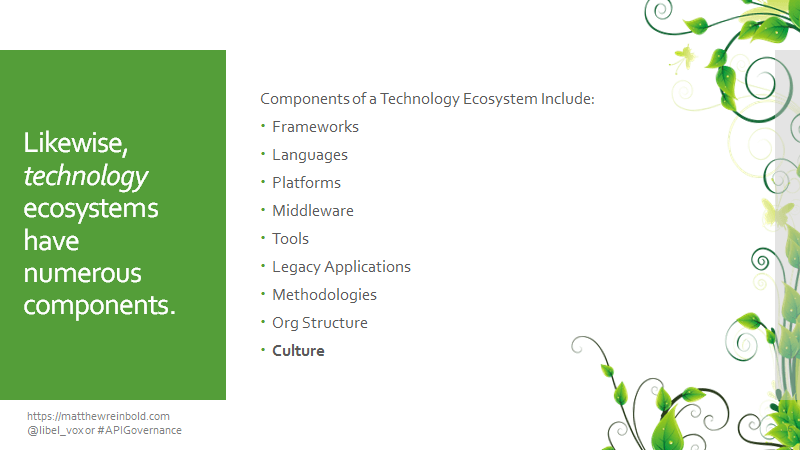

Likewise, technology ecosystems have numerous components. Attempting to address only one of them, while ignoring all the other factors, is like attempting to make a garden flourish while only being able to control the flow of water. What is adequate during times of drought or flooding does little to deal with insect infestation, or an invasive species. [Breakdown of elements taken from Matt McLarty's presentation, "Design-based Microservices, AKA Planes, Trains, and Automobiles", slide 42]

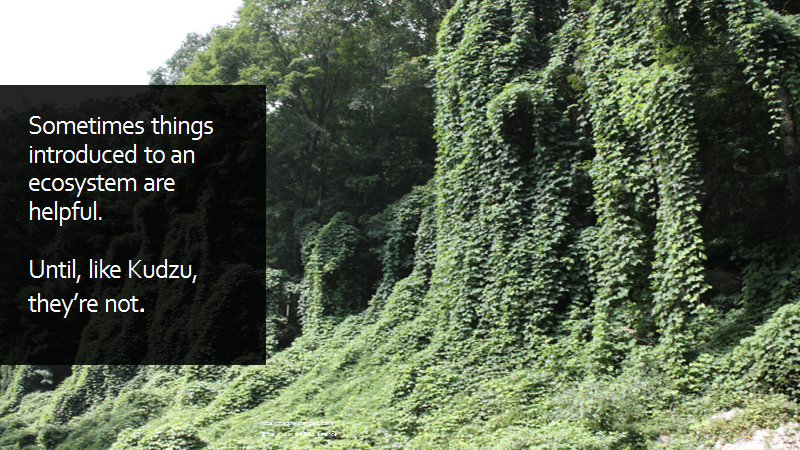

Kudzu is a Chinese vine introduced by way of Japan to the United States. It was a perfect match for the climate of the southeastern United States. In the right conditions, a Kudzu vine can grow almost a foot a day (between 19 and 30 centimeters). During the Dust Bowl of the 1930s, planting Kudzu was encouraged as a way of preventing soil erosion; its addition to the ecosystem was a desirable thing. And then the ecosystem changed. Rain returned and the places where Kudzu had taken root suddenly had themselves a problem. It grows so fast it smothers native plants and trees, drastically reducing their access to sunlight and water. Left unchecked, Kudzu will devastate ecosystems that are dependent on native plants and wildlife. In the US it does not have any natural predators. [Photo by Eli Christman and shared under a Creative Commons 2.0 license. Original photo here: https://flic.kr/p/abgksv]

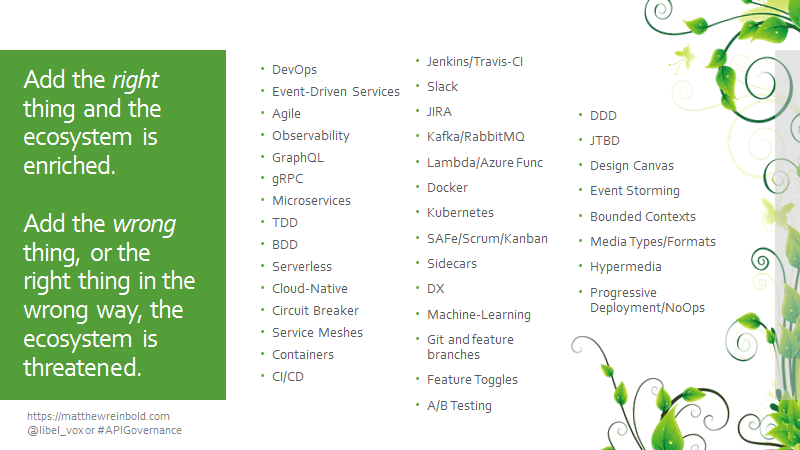

The modern software development environment has also been one of increasing complexity. On this page are a variety of processes, techniques, frameworks, technologies, and styles. The last decade has been marked by creating incrementally smaller deployable units. The tradeoff of increasingly granular executables is a non-linear increase in configuration and communication management. Today's modern development environment includes: This trend results in complexity, and complexity leads to emergent behaviors, or behaviors that may not be easily addressed with linear thinking. It is an ecosystem. How does one go about determining the right fit? Which of these things will be around two years from now? Five? Is there a way of identifying those things, like Kudzu, which seem to have short-term benefits and long-term ramifications? How do we identify species that are complementary to our ecosystems and those that will unbalance them?

A forest is a complex ecosystem. Think about how it handles change. There is a size hierarchy: pine needle, tree crown, patch, stand, forest, and biome. Those differences in scale also form a time hierarchy; said differently, as we increase in scope, we also increase in time frames of reference: What can happen in the short term is constrained by the larger, slower moving concepts. The range of what the needle may do is constrained by the tree crown, which is constrained by the patch and stand, which are controlled by the forest, which is controlled by the biome. It is a complex ecosystem. But it is not static. Innovation happens throughout the system via evolutionary competition among individual trees. Each tree survives or dies dealing with the stresses of crowding, parasites, predators, and weather. [Photo by Julien R on Unsplash]

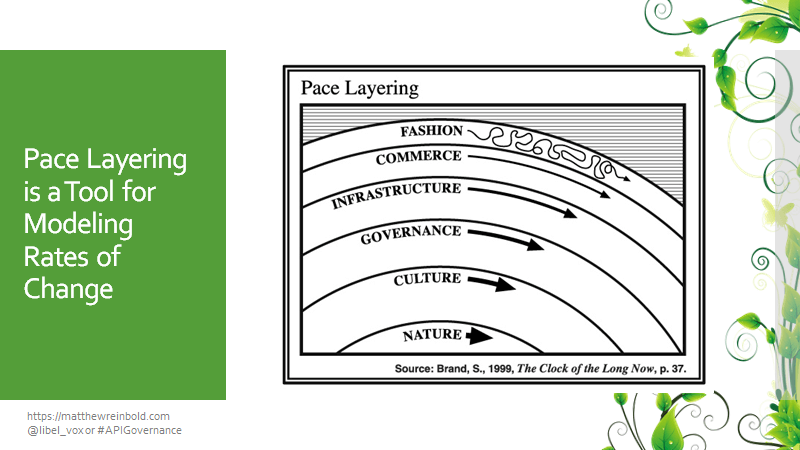

Stewart Brand's Pace Layer model is an attempt to capture this layering. "Pace Layers" appeared in Stewart's 1999 book, The Clock of the Long Now, and was an extension of the architectural concept of "shearing layers". Shearing Layers, a concept coined by architect Frank Duffy, was elaborated on by Stewart in his 1994 book, How Buildings Learn. It describes how buildings are a set of components that evolve on different timescales. The book was, subsequently, turned into a BBC television series that can be viewed online.

Different parts of a society, or industry, move at different rates. Concepts at the top of the graph change at a rapid clip. The further down one goes, the slower change occurs. Take, for example, a conference center. It is a complex ecosystem necessary to produce a given outcome: an event. The chairs are reconfigured as need dictates. They'll be swapped out according to fashion. They have rates of change much greater than the carpet or the visual facade, which changes faster than the street ordinances or hospitality laws. All of which changes faster than our cultural tendency of getting together face to face. It is the combination of fast and slow moving parts that gives a system its resiliency.

The layers are not independent. As each moves at its own speed, there is tension at the edges where the layers touch, something called "slip zones". Consider any recent emerging technology. Those electric scooters you've probably seen around are in a slip zone. Will they descend beyond a fad, becoming part of a city's transportation infrastructure, perhaps even requiring governance, on the way to becoming a cultural touchstone? Or is it a fad, resulting in some commerce but disappearing after the VC money runs out?* Fidget spinners were a fad. They descended to the commerce layer as everyone sought to sell some product. But they never became part of people's routines (their habitual infrastructure). There was no need for governance, and they never embedded themselves in cultural identity. As a result, the ubiquitous thing one summer is easily disposed of and replaced by the next.

This is such a beautiful summary that it is worth repeating. "The fast parts learn. The slow parts remember. The fast parts propose things, the slow parts dispose things. The fast and small instruct the slow and big with accrued innovations and occasional revolutions. At the same time, and we don't respect this as much as we should, the big and the slow control the fast and the small with constraints and with constancy. All the attention is paid to the fast parts. But all the power is in the slow parts." "Each layer needs to respect each other's pace. If commerce is too dominant in a society, it can jerk governance around harmfully. Or it can reach down and disrupt culture and nature by going too fast while not having the patience to deal with infrastructure problems. The flip side is where the Soviet Union tried to run everything at governance pace (five year plans, for example) and they destroyed other layers."

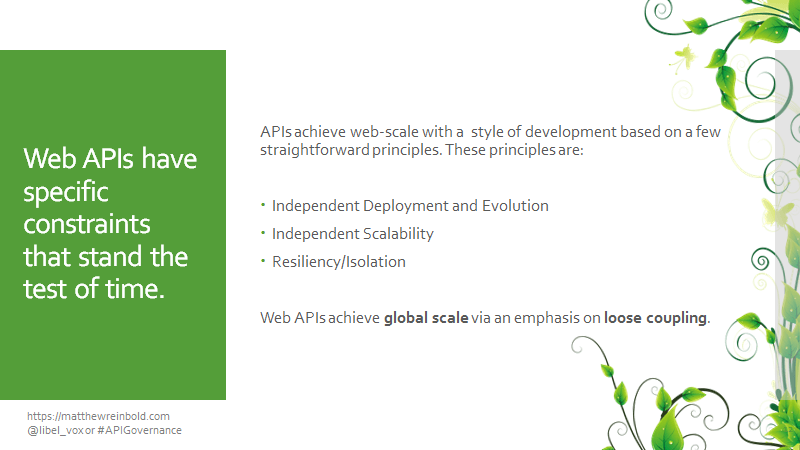

So let's start to bring this together. The web as a platform is a result of its architectural simplicity, the use of a widely implemented and agreed-upon protocol (HTTP), and the pervasiveness of common representation formats (JSON). Fashion (gRPC, GraphQL, RPC, SOA, etc.) will come and go. However, creating distributed systems with web-based APIs in the same manner as the web will continue because of its foundation in something deeper, more substantial. This is because of web principles like: The key for distributed systems success is a few well-known actions and the application-specific interpretation of resource representations.

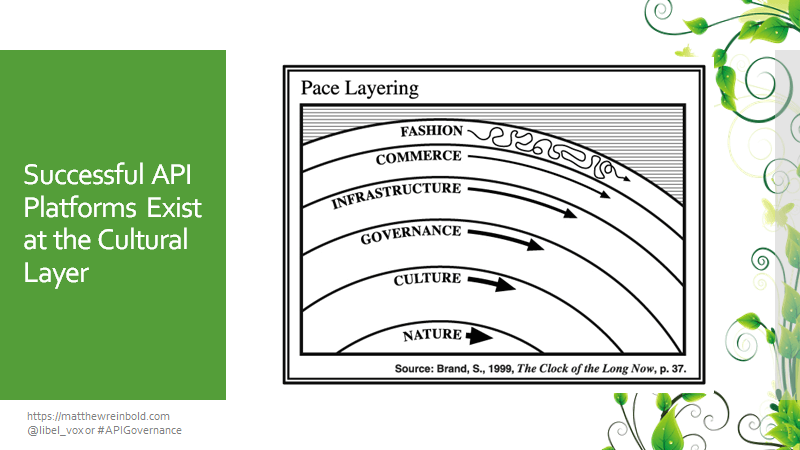

According to the book, "The Modern Firm", by John Roberts, culture is three things: people, architectures, and routines. A software development culture is not ping pong tables and keg stand Fridays. Culture is not open floor plans or the glowing adjectives used in press releases. Culture is how a company behaves when stressed. By mimicking the web, and embracing people, architectures, and routines that promote loose coupling, individual scaling, and resiliency between individual elements, the company I work for has embraced an API culture for sustaining innovation. How successful would a garden be if every plant had to grow at the same rate? If the entire ecosystem was comprised of a single flower? We nurture an ecosystem that is diverse, has a degree of independence, but is mutually responsible to the whole. More importantly, knock on wood, that firmament will remain stable while we evaluate new technology fashions that arise. The faster layers propose things. But we're not beholden to try everything. The slower-moving, cultural layer constrains the faster moving layers for the stability of the entire system. That said, how do we use this when evaluating change?

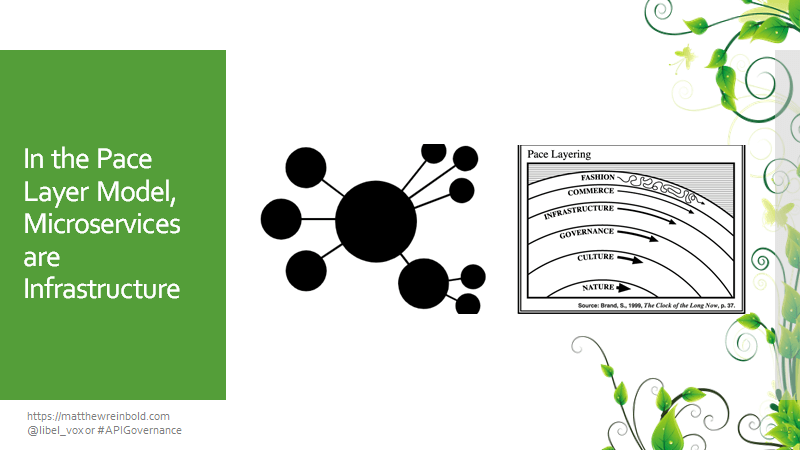

Let's look at two comparable, but different, API-related items. On the surface, we might lump things like hypermedia and microservices together. Both have been popular topics on the API speaking circuit over the last half decade and, in a rush, we can hand-wave at them as "web-API" things. I've written my email newsletter, NetAPI Notes, for the last several years. I consume numerous blog posts, slide decks, and video recordings every week in an attempt to share only the best, most salient information with my busy audience. In parsing all that material, it has become clear that microservices have taken root across software development shops in a way that hypermedia hasn't. It doesn't mean that hypermedia is a bad idea - far from it. And yet, time and time again, the hypermedia seeds that are cast don't seem to flourish. The Pace Layer model provides a theory.

Microservices are implementation details. They are supposed to be the smallest possible cohesive unit in a system. As Irakli Nadareishvili likes to say, "microservices are not about reuse!" They are about reducing the coordination overhead costs among teams. They are not "little APIs", although they use all of the API infrastructure (the protocols, networking frameworks, etc.) that already exists within a company. They lack exposure (remember, they're not about reuse) in order to maximize their ephemerality. They are like the chairs in this building. Or the pine needles in the forest. The rate of change is within the infrastructure layer.

Hypermedia is more than just providing links to related information, although it might be pitched that way. At a previous e-commerce job, I was a backend developer and architect for a product perfect for hypermedia. On checkout, the business wanted to dynamically A/B test various upsell offers based on the contents of the cart. Because of the rate at which they wanted to test offers, combined with the dynamic nature of the cart contents, trying to bake every possible upsell permutation into an executable that went through an app store approval process (which itself might take two weeks) was impossible. Hypermedia is a great solution for workflow-like events, which the checkout flow primarily was. Each state returned by the server would provide links to the next step. That next step may change, depending on what is being tested. The mobile application would navigate the path presented to it, and the business case would be fulfilled. Despite the natural fit, however, the work floundered. Hypermedia is more than just links. It's an inversion of communication flow. Rather than the mobile developer being able to look at the totality of exposed functionality and call their shot, they were now expected to respond to a breadcrumb trail they were given. It was a different architecture resulting in a different calling routine for the people involved. As we mentioned from John Roberts's definition, people, routines, and architecture are aspects of culture. Hypermedia, done correctly, challenges conventional development paradigms. It requires the appropriate supporting culture, something that moves more slowly to support new forms. It doesn't mean that hypermedia will never happen. What it does mean, however, is that adoption is on the order of a culture change, not an infrastructure change. Not only does it take longer, but successful culture change also requires a different set of approaches.

Note: this is a model. Anyone using a model should be quick to share the George Box quote, "all models are wrong, but some are useful". But despite being an oversimplified version of reality, a good model lets us see a situation in a new light, and makes us ask different questions in the pursuit of new and/or different insights. Using Stewart Brand's Pace Layering model, I conclude that there haven't been many compelling cases of hypermedia because the layer that needs to change, an organization's culture, moves at a much slower rate in adopting new things. Changing culture is more challenging than changing infrastructure.
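To make the checkout story above more concrete, here is a minimal sketch of the "follow the links you are given" style of client. It is not the code from that project: the paths, the `links`/`next` field names, and the `make_server` and `run_checkout` helpers are all hypothetical, and the "server" is just an in-process function standing in for an HTTP API.

```python
from typing import Callable, Dict, List, Optional


def make_server(upsell_variant: Optional[str]) -> Callable[[str], Dict]:
    """Build a fake server. All paths and field names here are hypothetical.

    `upsell_variant` stands in for a server-side A/B test switch: when set,
    an upsell step is linked in between the cart and payment steps.
    """

    def handle(path: str) -> Dict:
        if path == "/cart":
            nxt = f"/upsell/{upsell_variant}" if upsell_variant else "/payment"
            return {"state": "cart", "links": {"next": nxt}}
        if path.startswith("/upsell/"):
            variant = path.rsplit("/", 1)[-1]
            return {"state": f"upsell:{variant}", "links": {"next": "/payment"}}
        if path == "/payment":
            return {"state": "payment", "links": {"next": "/confirmation"}}
        if path == "/confirmation":
            return {"state": "confirmation", "links": {}}  # end of the workflow
        raise KeyError(path)

    return handle


def run_checkout(server: Callable[[str], Dict], start: str = "/cart",
                 max_steps: int = 10) -> List[str]:
    """Client that knows only the entry point and the 'next' link relation.

    It never hardcodes the order of steps; it follows whatever the server
    links to, so the flow can change without shipping a new client.
    """
    visited: List[str] = []
    path = start
    for _ in range(max_steps):
        response = server(path)
        visited.append(response["state"])
        next_path = response["links"].get("next")
        if next_path is None:
            break
        path = next_path
    return visited


if __name__ == "__main__":
    print(run_checkout(make_server(None)))
    print(run_checkout(make_server("warranty")))
```

The same `run_checkout` client walks both flows unchanged. That is the technical appeal, and also the inversion described above: the client developer no longer picks which call comes next.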

I'll leave the exercise of walking through GraphQL, what change layer it is most likely to be, and the future ramifications to the reader. However, if you do that, keep the following questions in mind: For example, consider a company where GraphQL is being considered. Perhaps previously created REST-ish APIs have had poor bounded contexts, ill-fitting with desired business experiences. But if the culture of a company (the people, routines, and architectures) can't accurately articulate well-isolated, composed pieces of business functionality, what happens if the culture stays the same and we swap infrastructure, instead? Your mileage may vary. But I'd argue that, in the above example, you've conceded defeat and pushed the hard work of creating well-understood business interactions onto the integrating client. Is that a sustainable decision for your ecosystem? Or, over time, will we look back on this as a moment where Kudzu was encouraged?

We work in an industry proficient in eating its young, of chasing the dragon of the "next big thing", of disposing of perfectly workable solutions in the pursuit of the next silver bullet. We can't (and don't want to) stop the growth of our ecosystems. Excellence within our software development circles will remain an ongoing, evolving conversation with constant change. But we also don't have to accept a binge-purge cycle of architectural violence as a given. We can break that pattern. We can stand on the shoulders of giants, rather than repeatedly attempting to chop them off at the knees. Like attentive gardeners, we can carefully cultivate dynamic, complex environments where innovation can happen, while being wary of threats that might jeopardize the balance.

Thank you for your attention.

* Update 2023-12-20: Scooter startup Bird has declared bankruptcy.