"AI CREATES MOVIE TRAILER!" yells the headline. IBM's Watson, looking for something else to do besides win Jeopardy, is now making movie trailers. The trailer by itself, for a horror movie called Morgan, isn't anything special: some off putting music, dark sets, and implied danger. It would just be an ok achievement for a computer until one reads the fine print: Watson actually selected six minutes of scenes it identified as potentially important, and then a human editor composed the resulting minute and a half, paired it with music, and then published the press release proclaiming the marketing gimmick finished.

*slow clap*

A much better example of applied machine intelligence is the short film Sunspring. The script was created by feeding numerous "sci-fi" screenplays through an algorithm. The result was then interpreted by humans - a director, cinematographer, costumers, actors, scorers - to give it (some?) coherent narrative. It is interesting. However, it clearly shows the limits of what even the state of the art is capable of. There's a difference between pattern matching and creativity.

The Turing Test is a recently released game that explores these issues in more depth. The game mentions the Chinese Room Argument, a thought experiment from the early 80s attributed to John Searle. In the theoretical situation, a non-Chinese speaker is put into a room. Messages, written in Chinese, are passed into the room and responses are required. While the individual inside doesn't understand the contents of what they are given, they have a book where they can match the characters they receive with correct responses. Once they find the matching pattern, they copy out the response to be passed back out.

In the experiment, is the individual in the room "speaking" Chinese? To those outside the room, it may appear that a conversation is happening. The person inside, however, has no understanding of what is being said.
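For the programmers in the audience, the room can be sketched as little more than a lookup table. This is a toy, not Searle's own formulation: the `RULE_BOOK` entries, the `FALLBACK` reply, and the `room_occupant` function are all made up for illustration.

```python
# Hypothetical rule book: incoming symbol strings paired with outgoing ones.
# The entries are illustrative only; they do not come from Searle's paper.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "会，我说得很流利。",
}

# Hypothetical reply used when no rule matches the incoming message.
FALLBACK = "请再说一遍。"


def room_occupant(message: str) -> str:
    """Return the response the rule book pairs with the message.

    The function only compares symbols for equality. Nothing in it
    represents what the symbols mean.
    """
    return RULE_BOOK.get(message, FALLBACK)


if __name__ == "__main__":
    # From outside the room this looks like one turn of a conversation.
    print(room_occupant("你会说中文吗？"))
```

Swap the strings for any other symbols and the function works just as well, which is the point: the occupant can claim fluency without understanding the question or the answer.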

That, ultimately, explains why Sunspring is so odd. The machine learning algorithm was told to find significant patterns in the training data. But while it picked up the syntax of language, it had no semantics; it doesn't understand what the words mean.

There have been tremendous strides in the field of machine learning. Those are heavily contrasted with some astonishing setbacks. To this point, however, what is often trumpeted as "AI" is still very much just a more efficient Chinese room participant. We are not yet in the presence of true, creative AI. Even when it arrives, the form that it takes will (most likely) be altogether alien, bordering on unrecognizable.

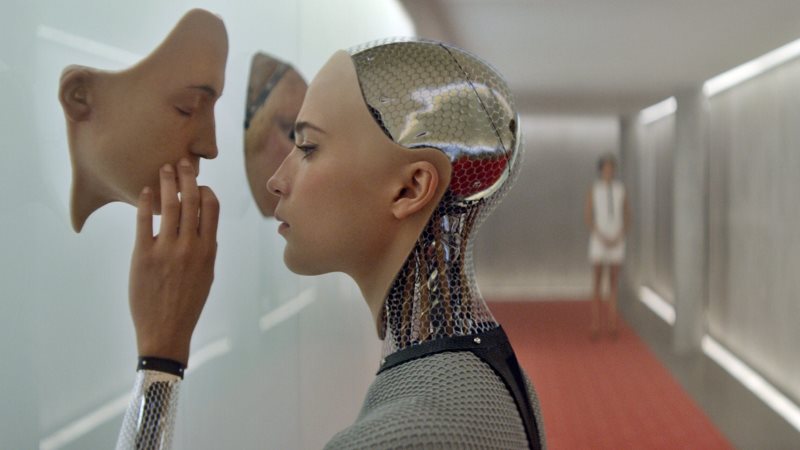

We get a moment of that in Ex Machina (2015). (If you haven't seen it yet, this is your spoiler warning; what follows discusses important plot points of the third act.) Nearing the end, Ava (Alicia Vikander) carries out her escape from her creator with the help of Caleb (Domhnall Gleeson). She engineers a particularly brutal death for Nathan, her maker, played by Oscar Isaac. While the violence is shocking, the result isn't: Ava is exacting a justice that jibes with most people's moral code.

But it is the next action that chills to the bone. Millions of years of evolution have ingrained certain expectations about correct behavior. Humans yearn for fairness. When we perceive a situation as "unfair" we tend to get testy. Caleb risks a tremendous amount to free Ava. As a result, we expect a happy ending for him; as the film's "hero" that would be fair. Yet Ava leaves him to die not because she has to, but because she can. The cold calculation of that moment is as alien and terrifying as anything else in the film.

In a New America AI conversation, author Charles Stross made the argument that we are already in the presence of AI: the Limited Liability Corporation. Legally, they are treated as people. They acquire resources to sustain their life. They grow, they mature, and, in some cases, even die. In Stross's tour-de-force singularity book, Accelerando (Creative Commons Download Available), we don't find other alien species as we reach into space, but business algorithms continuing to churn long after their creators have passed from existence:

"Basically, sufficiently complex resource-allocation algorithms reallocate scarce resources ... and if you don't jump to get out of their way, they'll reallocate you. I think that's what happened inside the Matrioshka brain we ended up in: Judging by the Slug it happens elsewhere, too. You've got to wonder where the builders of that structure came from. And where they went. And whether they realized that the destiny of intelligent tool-using life was to be a stepping-stone in the evolution of corporate instruments."

Today's showy attempts at machine intelligence are attempts at creating artifacts for people. They, like the participants in the Chinese room experiment, are being given a string of characters. They respond with what, they are told, are pleasing responses. When our constructs begin to reshape culture for their own, and most likely unrecognizable, ends - that is the point when we are confronted with true AI. And, like Caleb's imprisonment by Ava, that realization will come too late.

Update 7/31/2017: Maciej Cegłowski, the creator of Pinboard and notable social critic, posted the slides to his wonderful talk, “Superintelligence: The Idea That Eats Smart People”. Some well-reasoned (and reassuring) thoughts on AI. Or at least that’s what the machines want us to think…